Proper configuration of your AI Agent is crucial for optimal performance. Each setting affects how your Agent communicates and processes information.

Core Settings

1. Select Language

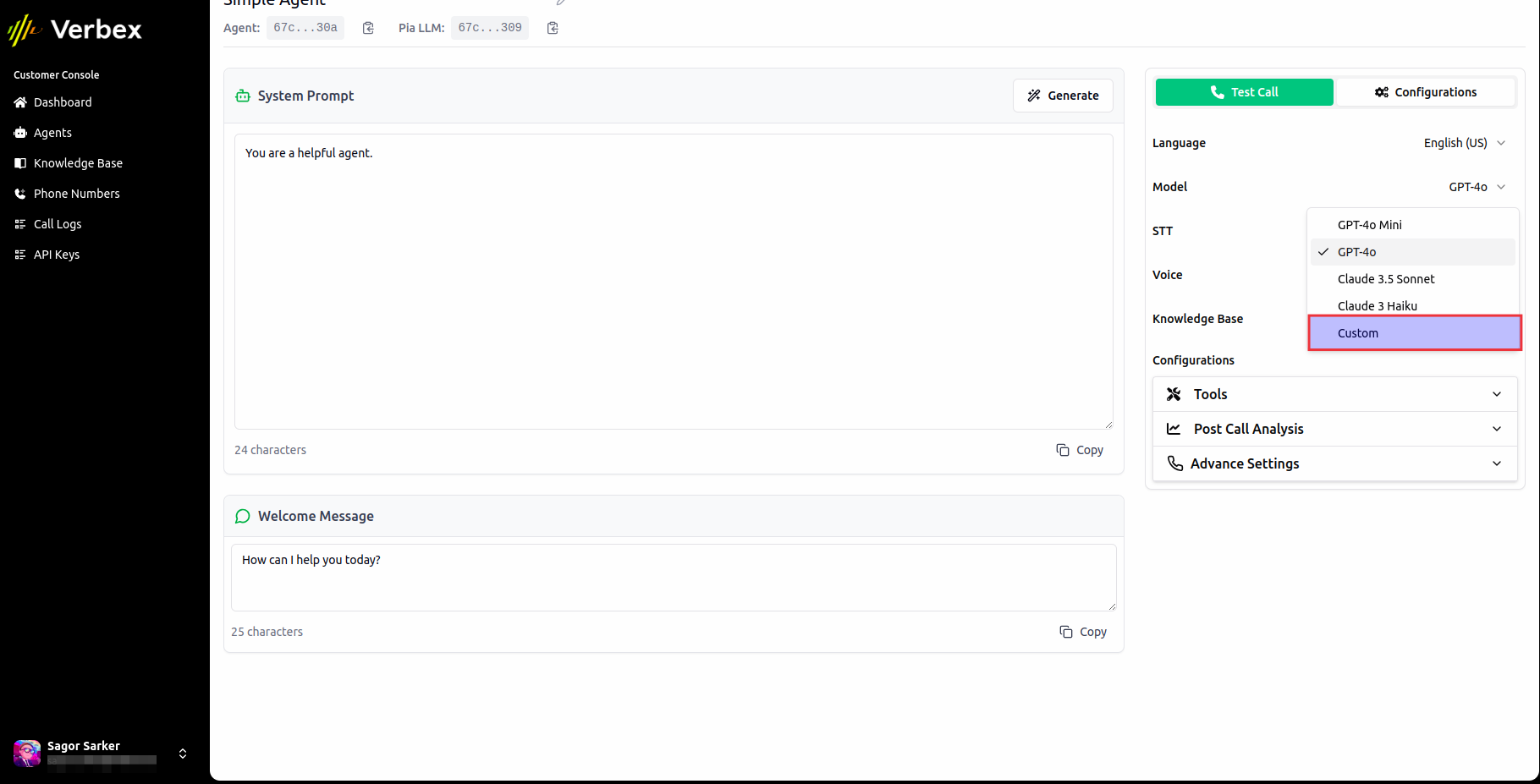

Choose the primary language for your AI Agent’s interactions.

2. Select Model

Choose the AI model that will power your Agent’s intelligence.

OpenAI Models

GPT-4.1

Highest intelligence

- Ideal for complex tasks and advanced instruction following

- Supports up to 1 million tokens for extensive context handling

- Excels in reasoning and long-context comprehension

- Suitable for use in Agentic AI Agents

- Best choice for tool integration and sophisticated applications

GPT-4.1-mini

Balanced intelligence and performance

- Faster responses with lower latency compared to GPT-4.1

- Maintains support for up to 1 million tokens

- Handles moderately complex instructions effectively

- Suitable for applications requiring a balance between performance and cost

- Can be used in Agentic AI Agents

GPT-4.1-nano

Lightweight and cost-effective

- Fastest response times among the GPT-4.1 models

- Supports up to 1 million tokens for context

- Optimized for simple tasks like classification and autocomplete

- Not suitable for complex instruction following or tool integration

- Best for applications where speed and cost are prioritized over advanced capabilities

GPT-4o

Higher intelligence

- First choice for complex instructions in the system prompt of a Simple AI Agent

- Used by default in Agentic AI Agents

- Slower responses, higher latency

- Better understanding

- Higher accuracy; handles out-of-context queries better

- Optimal for use with tools

GPT-4o Mini

Lower intelligence, budget-friendly

- Faster responses, lower latency

- Works best with a simple system prompt and less complex instructions

- Limited performance with tools

- Best for simple queries

- Not supported in Agentic AI Agents

Realtime Models

Realtime models are optimized for low-latency, real-time conversational experiences. These models enable natural, fluid interactions with minimal delay.

OpenAI Realtime Models

GPT-Realtime

Latest OpenAI realtime model

- Ultra-low latency for real-time voice conversations

- Optimized for natural, fluid dialogue

- Supports streaming responses with minimal delay

- Ideal for voice-based AI agents and live customer interactions

- Handles context switches and interruptions gracefully

GPT-Realtime-2025-08-28

Dated version of the OpenAI realtime model

- Specific snapshot of the realtime model from August 28, 2025

- Provides consistency for applications requiring a fixed model version

- Same low-latency capabilities as GPT-Realtime

- Use when you need version stability and predictable behavior

GPT-4o-Realtime-Preview

Preview version of GPT-4o realtime capabilities

- Combines GPT-4o intelligence with realtime processing

- Enhanced reasoning capabilities in real-time scenarios

- Better handling of complex, multi-turn conversations

- Preview status means features and performance may evolve

Google Gemini Realtime Models

Gemini-2.0-Flash-Live-001

Google’s fast realtime model

- Optimized for speed and low latency

- Excellent for live voice interactions

- Fast response times suitable for conversational AI

- Supports multimodal inputs in real-time scenarios

Gemini-Live-2.5-Flash-Preview

Enhanced preview version of Gemini Live

- Latest preview of Google’s realtime capabilities

- Improved performance and features over Gemini-2.0-Flash-Live-001

- Better context understanding in live conversations

- Preview status indicates ongoing improvements

Realtime models are specifically designed for voice-based AI agents and scenarios requiring immediate responses. They prioritize low latency and natural conversation flow over complex reasoning tasks.

Groq Models

Groq models are open-source models powered by Groq’s high-performance inference infrastructure, offering exceptional speed and cost-effectiveness.

Groq Llama 3.3 70B Versatile

High-performance open-source model

- 70 billion parameters with optimized transformer architecture

- 128K token context window for extensive context handling

- Strong instruction following and tool use capabilities

- Fast inference powered by Groq’s infrastructure

Groq Llama 3.1 8B Instant

Fast and budget-friendly open-source model

- 8 billion parameters optimized for instant responses

- 128K token context window

- Ultra-fast inference for real-time applications

- Ideal for applications requiring quick responses without complex reasoning

Groq models leverage Groq’s specialized LPU (Language Processing Unit) architecture to deliver exceptional inference speed, making them ideal for high-throughput applications and real-time use cases.

Verbex Models

Verbex Bangla Mini

Bengali-specialized model

- Optimized for Bengali language tasks and tool-calling scenarios

- Handles Bengali text and tool interactions effectively

- Suitable for building conversational agents that need to interact with external systems in Bengali

- Faster than the GPT-4o and GPT-4.1 models

- Best for Bengali language tasks and tool-calling scenarios

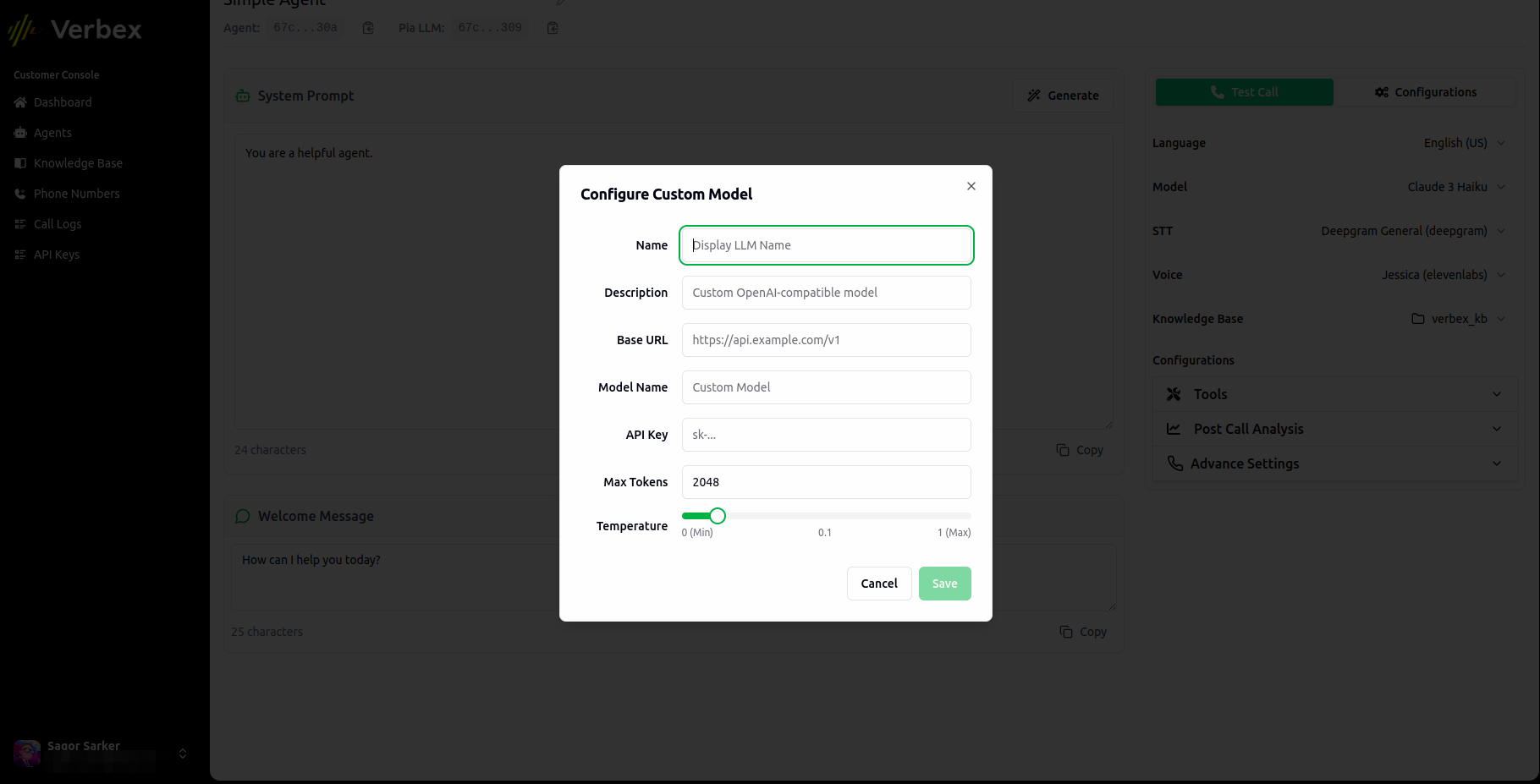

Custom Model

Verbex supports OpenAI-compatible custom LLMs, so you can use any LLM that is served through an OpenAI-compatible API. Before adding a custom model to Verbex, keep in mind that:

- The model should be OpenAI-compatible.

- The model should support streaming responses.

- The model should support tool calling.
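Before connecting a custom model, it can help to confirm offline that you can build a streaming, tool-enabled request in the OpenAI Chat Completions format and reassemble the Server-Sent Events (SSE) it streams back. The sketch below is a minimal illustration under stated assumptions, not Verbex’s own validation logic: it assumes the standard Chat Completions body (`model`, `messages`, `stream`, `tools`) and the `data: ...` / `data: [DONE]` streaming convention, and the `get_order_status` tool, the helper names, and the sample chunks are hypothetical.

```python
import json
from typing import Any, Iterable


def build_request(model: str, user_text: str) -> dict[str, Any]:
    """Build an OpenAI Chat Completions-style body that needs both streaming and tools."""
    return {
        "model": model,
        "stream": True,  # the custom model must support streaming responses
        "messages": [{"role": "user", "content": user_text}],
        "tools": [
            {
                "type": "function",
                "function": {
                    # Hypothetical tool, used only to check that tool calling works.
                    "name": "get_order_status",
                    "description": "Look up the status of an order by its ID.",
                    "parameters": {
                        "type": "object",
                        "properties": {"order_id": {"type": "string"}},
                        "required": ["order_id"],
                    },
                },
            }
        ],
    }


def parse_sse_stream(lines: Iterable[str]) -> tuple[str, dict[int, dict[str, str]]]:
    """Reassemble streamed text and tool-call fragments from SSE 'data:' lines."""
    text_parts: list[str] = []
    tool_calls: dict[int, dict[str, str]] = {}
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # ignore blank keep-alive lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta", {})
            if delta.get("content"):
                text_parts.append(delta["content"])
            # Tool-call names and JSON arguments arrive in fragments keyed by index.
            for call in delta.get("tool_calls") or []:
                slot = tool_calls.setdefault(call["index"], {"name": "", "arguments": ""})
                function = call.get("function", {})
                slot["name"] += function.get("name") or ""
                slot["arguments"] += function.get("arguments") or ""
    return "".join(text_parts), tool_calls


if __name__ == "__main__":
    # Hypothetical sample chunks shaped like an OpenAI-compatible streaming tool call.
    sample = [
        'data: {"choices":[{"index":0,"delta":{"tool_calls":[{"index":0,"id":"call_1",'
        '"type":"function","function":{"name":"get_order_status","arguments":""}}]}}]}',
        'data: {"choices":[{"index":0,"delta":{"tool_calls":[{"index":0,'
        '"function":{"arguments":"{\\"order_id\\": \\"42\\"}"}}]}}]}',
        "data: [DONE]",
    ]
    text, calls = parse_sse_stream(sample)
    print(calls[0]["name"], json.loads(calls[0]["arguments"]))
```

If a model streams plain text correctly but never produces `tool_calls` deltas when tools are offered, it likely does not meet the tool-calling requirement above.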

We plan to add models like Anthropic Claude, Google Gemini, and more in the future.

3. Select STT

Select the speech-to-text (STT) module that will convert user speech into text.

The STT module is crucial for accurate transcription of customer speech, directly impacting your AI Agent’s ability to understand and respond appropriately.

4. Select Voice

Choose a voice that represents your brand and resonates with your audience.

When selecting a voice, consider:

- Language compatibility

- Gender preference

- Accent appropriateness

- Speaking style

- Brand alignment

Configuration Best Practices

Language & Region

Match your target market’s primary language and regional preferences

Model Selection

Balance performance needs with budget constraints

Voice Choice

Align voice characteristics with brand identity

STT Accuracy

Test STT performance with your typical use cases
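One common way to test STT performance on your typical use cases is word error rate (WER). Record representative calls, write down what was actually said, and compare that reference with the STT output. WER is the number of word substitutions, deletions, and insertions needed to turn the reference into the transcript, divided by the number of reference words. The sketch below is a hypothetical helper, not part of Verbex; it assumes simple normalization (lowercasing and splitting on whitespace, with punctuation kept), which you may need to adapt to your language and transcripts.

```python
def word_errors(reference: str, hypothesis: str) -> tuple[int, int]:
    """Return (substitutions + deletions + insertions, number of reference words)."""
    # Simplistic normalization (an assumption): lowercase and split on whitespace.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()

    # Word-level Levenshtein distance, computed one row at a time.
    # prev[j] is the distance between the reference prefix processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from the transcript
                curr[j - 1] + 1,     # insertion: extra word in the transcript
                prev[j - 1] + cost,  # substitution (cost 1) or exact match (cost 0)
            )
        prev = curr
    return prev[len(hyp)], len(ref)


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER for a single utterance."""
    errors, n = word_errors(reference, hypothesis)
    if n == 0:
        raise ValueError("reference transcript must contain at least one word")
    return errors / n


def corpus_wer(pairs: list[tuple[str, str]]) -> float:
    """Pool errors over many (reference, hypothesis) pairs, weighted by reference length."""
    total_errors = 0
    total_words = 0
    for reference, hypothesis in pairs:
        errors, n = word_errors(reference, hypothesis)
        total_errors += errors
        total_words += n
    if total_words == 0:
        raise ValueError("no reference words to score")
    return total_errors / total_words


print(word_error_rate("book a table for two", "book table for too"))  # 0.4
```

Pooling errors with `corpus_wer` instead of averaging per-utterance WER keeps very short utterances from dominating the score when you compare STT modules.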

Performance Considerations

Model Comparison

| Model | Performance | Cost | Best For | Tool Calling |
|---|---|---|---|---|
| GPT-4.1 | Highest | Highest | Complex tasks, extensive context (1M tokens), agentic AI | Excellent |
| GPT-4.1-mini | High | Moderate | Balanced performance and cost, agentic AI | Excellent |
| GPT-4.1-nano | Moderate | Low | Simple tasks, classification, autocomplete | Limited |
| GPT-4o | High | Higher | Complex interactions, tool-based operations | Excellent |
| GPT-4o Mini | Moderate | Lower | Basic queries without tools | Limited |
| GPT-Realtime | High | Higher | Real-time voice conversations, live interactions | Excellent |
| GPT-4o-Realtime-Preview | High | Higher | Real-time with enhanced reasoning | Excellent |
| Groq Llama 3.3 70B | High | Low | Complex reasoning, coding, tool use (cost-effective) | Excellent |
| Groq Llama 3.1 8B | Moderate | Lowest | Fast responses, simple tasks (ultra cost-effective) | Good |
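To apply the “balance performance needs with budget constraints” guidance, you can encode the table’s qualitative ratings and shortlist the cheapest models that meet a minimum performance and tool-calling level. This is a hypothetical helper, not a Verbex API. The numeric ranks are an assumption that orders the table’s labels (for example, treating “Lower” cost as falling between “Low” and “Moderate”), and the table does not capture other factors such as Agentic AI Agent support, realtime voice suitability, or language.

```python
from typing import NamedTuple

# Assumed orderings of the table's qualitative labels (higher rank = more).
PERFORMANCE = {"Moderate": 0, "High": 1, "Highest": 2}
COST = {"Lowest": 0, "Low": 1, "Lower": 2, "Moderate": 3, "Higher": 4, "Highest": 5}
TOOL_CALLING = {"Limited": 0, "Good": 1, "Excellent": 2}


class ModelRow(NamedTuple):
    name: str
    performance: str
    cost: str
    tool_calling: str


# Rows transcribed from the Model Comparison table.
MODELS = [
    ModelRow("GPT-4.1", "Highest", "Highest", "Excellent"),
    ModelRow("GPT-4.1-mini", "High", "Moderate", "Excellent"),
    ModelRow("GPT-4.1-nano", "Moderate", "Low", "Limited"),
    ModelRow("GPT-4o", "High", "Higher", "Excellent"),
    ModelRow("GPT-4o Mini", "Moderate", "Lower", "Limited"),
    ModelRow("GPT-Realtime", "High", "Higher", "Excellent"),
    ModelRow("GPT-4o-Realtime-Preview", "High", "Higher", "Excellent"),
    ModelRow("Groq Llama 3.3 70B", "High", "Low", "Excellent"),
    ModelRow("Groq Llama 3.1 8B", "Moderate", "Lowest", "Good"),
]


def shortlist(min_performance: str, min_tool_calling: str) -> list[str]:
    """Return models meeting both minimums, cheapest first, stronger first on cost ties."""
    candidates = [
        m for m in MODELS
        if PERFORMANCE[m.performance] >= PERFORMANCE[min_performance]
        and TOOL_CALLING[m.tool_calling] >= TOOL_CALLING[min_tool_calling]
    ]
    # list.sort is stable, so models with equal keys keep their table order.
    candidates.sort(key=lambda m: (COST[m.cost], -PERFORMANCE[m.performance]))
    return [m.name for m in candidates]


print(shortlist("High", "Excellent"))
# ['Groq Llama 3.3 70B', 'GPT-4.1-mini', 'GPT-4o', 'GPT-Realtime', 'GPT-4o-Realtime-Preview', 'GPT-4.1']
```

Sorting on cost first and performance second reflects a budget-first choice; swap the order of the sort key if capability matters more than price for your agent.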